Estimating a scene reconstruction and the camera motion from in-body videos is challenging due to several factors, e.g. the deformation of in-body cavities or the lack of texture. In this paper we present Endo-Depth-and-Motion, a pipeline that estimates the 6-degrees-of-freedom camera pose and dense 3D scene models from monocular endoscopic videos. Our approach leverages recent advances in self-supervised depth networks to generate pseudo-RGBD frames, then tracks the camera pose using photometric residuals and fuses the registered depth maps in a volumetric representation. We present an extensive experimental evaluation on the public Hamlyn dataset, showing high-quality results and comparisons against relevant baselines.
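To make the idea of a photometric residual on pseudo-RGBD frames more concrete, here is a minimal sketch, not the authors' implementation. It assumes a pinhole camera with intrinsics `K`, a 4x4 rigid transform `T_cur_ref` from the reference to the current camera, grayscale images of equal size, and nearest-neighbour sampling. The function name and all parameter names are hypothetical. It only evaluates the residuals for a given pose; a tracker would additionally minimise them over the pose.

```python
import numpy as np


def photometric_residuals(gray_ref, depth_ref, gray_cur, K, T_cur_ref):
    """Warp reference pixels into the current frame and compare intensities.

    Returns (residuals, valid), both of shape (H, W). Pixels with no depth
    (assumed to be encoded as 0) or that project outside the current image
    are marked invalid and get a residual of 0.
    """
    h, w = depth_ref.shape
    v, u = np.mgrid[0:h, 0:w]  # pixel rows (v) and columns (u)
    z = depth_ref.astype(np.float64)

    # Back-project every reference pixel to a 3D point (homogeneous coords).
    x = (u - K[0, 2]) / K[0, 0] * z
    y = (v - K[1, 2]) / K[1, 1] * z
    pts = np.stack([x, y, z, np.ones_like(z)], axis=-1).reshape(-1, 4)

    # Move the points into the current camera frame.
    pts_cur = pts @ T_cur_ref.T
    zc = pts_cur[:, 2]
    ok = (z.reshape(-1) > 0) & (zc > 1e-9)
    zc_safe = np.where(ok, zc, 1.0)  # avoid dividing by zero on invalid points

    # Project into the current image and round to the nearest pixel.
    u_cur = np.rint(K[0, 0] * pts_cur[:, 0] / zc_safe + K[0, 2]).astype(int)
    v_cur = np.rint(K[1, 1] * pts_cur[:, 1] / zc_safe + K[1, 2]).astype(int)
    ok &= (u_cur >= 0) & (u_cur < w) & (v_cur >= 0) & (v_cur < h)

    # Residual = intensity seen in the current frame minus reference intensity.
    res = np.zeros(h * w)
    cur = gray_cur.astype(np.float64)
    ref = gray_ref.astype(np.float64).reshape(-1)
    res[ok] = cur[v_cur[ok], u_cur[ok]] - ref[ok]
    return res.reshape(h, w), ok.reshape(h, w)
```

The depth and the translation part of `T_cur_ref` must use the same unit. Bilinear sampling and a robust cost would be natural refinements, but they are left out to keep the sketch short.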

Here you can download the rectified stereo images, the calibration and the ground truth of the in vivo endoscopy stereo video dataset of the Hamlyn Center Laparoscopic at Imperial College London. The ground truth has been created with the stereo matching software Libelas. The rectified color images are stored as uint8 .jpg RGB images and the depth maps in mm as uint16 .png.
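As an illustration of the storage format described above, the following sketch converts a uint16 depth map in millimetres to floating-point metres. The function name is hypothetical, and treating a value of 0 as "no ground truth" is an assumption, not something stated on this page.

```python
from typing import Tuple

import numpy as np


def depth_mm_to_metres(depth_mm: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Convert a uint16 depth map in millimetres to float32 metres.

    Returns (depth_m, valid). ASSUMPTION: a stored value of 0 marks pixels
    without a depth measurement.
    """
    if depth_mm.dtype != np.uint16:
        raise TypeError(f"expected uint16 depth map, got {depth_mm.dtype}")
    valid = depth_mm > 0
    depth_m = depth_mm.astype(np.float32) / 1000.0
    return depth_m, valid
```

The input array could be read, for example, with OpenCV's `cv2.imread(path, cv2.IMREAD_UNCHANGED)`, which keeps the 16-bit values instead of converting them to 8 bits.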

You can also download the Endo-Depth depth prediction models trained on our rectified Hamlyn dataset with monocular, stereo, and monocular plus stereo losses, along with the splits used.

Source Code

The source code for Endo-Depth's single-image depth prediction, the photometric and other tracking methods, and the volumetric fusion is available on GitHub.

Videos

IROS 2021 video presentation explaining the Endo-Depth-and-Motion paper in detail.

Endo-Depth-and-Motion: Reconstruction and Tracking in Endoscopic Videos


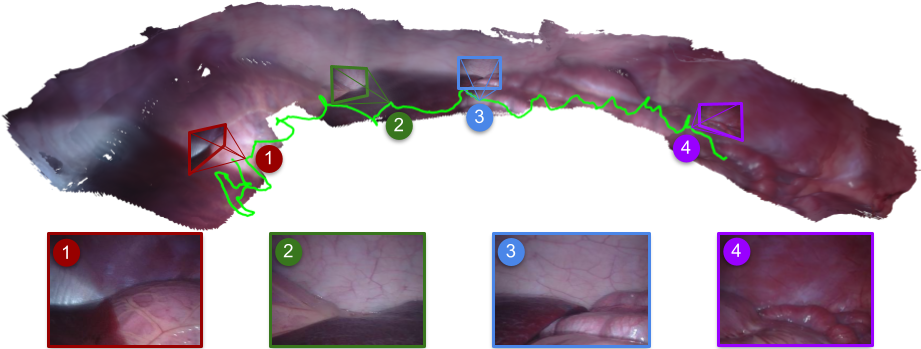

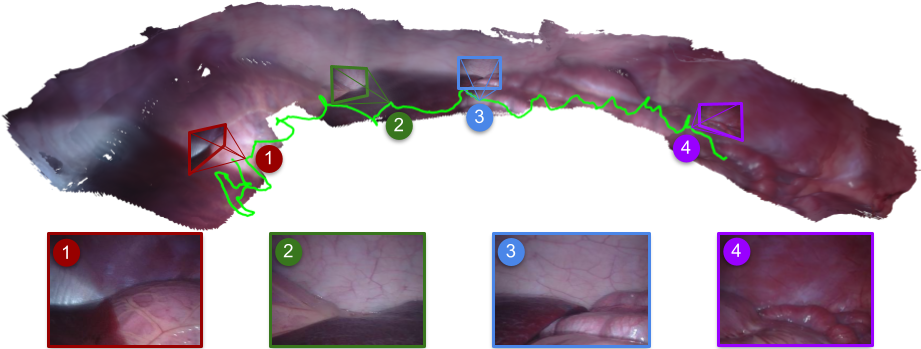

Endo-Depth-and-Motion's photometric tracking and volumetric reconstruction working on several Hamlyn sequences.

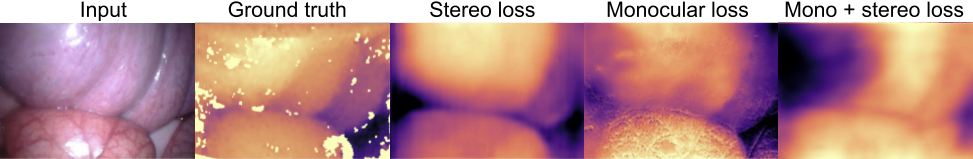

Depth estimation of Endo-Depth and Libelas on the Hamlyn dataset


Depth estimation using the stereo matching method Libelas and the monocular unsupervised deep learning algorithm Endo-Depth (based on Monodepth2), which was trained with a stereo loss and a first-layer model resolution close to that of the input images. The Libelas depth estimates are used as ground truth for evaluating Endo-Depth. The point clouds are built from the Endo-Depth depth estimates.

The Libelas and Monodepth2 estimates for the same instant use consistent pixel colors, so the two depth maps of a frame can be compared visually.
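Since the Libelas depth serves as ground truth for Endo-Depth, a comparison between the two can be sketched as below. The absolute relative error and RMSE shown here are common depth-error measures chosen for illustration; this page does not state which metrics the authors report. The function name is hypothetical, and treating a ground-truth value of 0 as "no measurement" is an assumption.

```python
import numpy as np


def depth_errors(pred_mm: np.ndarray, gt_mm: np.ndarray) -> dict:
    """Compare a predicted depth map with a ground-truth depth map (both in mm).

    ASSUMPTION: ground-truth pixels equal to 0 carry no measurement and are
    excluded from the statistics.
    """
    if pred_mm.shape != gt_mm.shape:
        raise ValueError("prediction and ground truth must have the same shape")
    valid = gt_mm > 0
    if not valid.any():
        raise ValueError("ground truth contains no valid pixels")

    pred = pred_mm[valid].astype(np.float64)
    gt = gt_mm[valid].astype(np.float64)
    diff = pred - gt
    return {
        "abs_rel": float(np.mean(np.abs(diff) / gt)),  # mean |error| / depth
        "rmse_mm": float(np.sqrt(np.mean(diff ** 2))),  # root mean squared error
        "n_valid": int(valid.sum()),                    # pixels that were compared
    }
```

Because the stereo ground truth is usually sparser than the network output, reporting `n_valid` alongside the errors makes it clear how many pixels each number is based on.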

Paper and Bibtex

RA-L paper available here

arXiv paper available here

@article{recasens2021endo,
  title={Endo-Depth-and-Motion: Reconstruction and Tracking in Endoscopic Videos Using Depth Networks and Photometric Constraints},
  author={Recasens, David and Lamarca, Jos{\'e} and F{\'a}cil, Jos{\'e} M and Montiel, JMM and Civera, Javier},
  journal={IEEE Robotics and Automation Letters},
  volume={6},
  number={4},
  pages={7225--7232},
  year={2021},
  publisher={IEEE}
}
