
The Drunkard's Dataset

[Download Dataset]

You can download The Drunkard's Dataset from the link above. The root folder contains two versions of the dataset with different image resolutions, 1024x1024 and 320x320 pixels. Both versions share the following folder structure:

- The Drunkard's Dataset
  - Dataset resolutions
    - Scenes
      - Difficulty levels
        - Color
        - Depth
        - Optical flow
        - Normal
        - Pose
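The hierarchy above (resolution, then scene, then difficulty level) can be walked with `pathlib`. In this sketch the counts of 19 scenes and 4 difficulty levels come from the dataset description, but the folder names (`1024` and `320`, zero-padded scene indices, `level0` to `level3`) are hypothetical placeholders. Check them against the actual download before relying on them.

```python
from itertools import product
from pathlib import Path

# Counts taken from the dataset description.
NUM_SCENES = 19
NUM_LEVELS = 4
# HYPOTHETICAL folder names for the two resolution versions.
RESOLUTIONS = ("1024", "320")


def sequence_dirs(root, resolution):
    """List every (scene, difficulty level) directory under one resolution.

    Assumes (hypothetically) that scenes are named 00000, 00001, ... and
    levels level0..level3. Each returned directory is expected to hold the
    Color, Depth, Optical flow, Normal and Pose data of that sequence.
    """
    if resolution not in RESOLUTIONS:
        raise ValueError(f"unknown resolution folder: {resolution!r}")
    base = Path(root) / resolution
    return [
        base / f"{scene:05d}" / f"level{level}"
        for scene, level in product(range(NUM_SCENES), range(NUM_LEVELS))
    ]
```

With 19 scenes and 4 levels each, `sequence_dirs(root, "320")` returns 76 sequence paths for one resolution.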

For each of the 19 scenes there are 4 levels of deformation difficulty, and each level contains color and depth images, optical flow and normal maps, and the camera trajectory.

- Color: RGB uint8 .png images.

- Depth: uint16 .png grayscale images. To obtain metric depth in meters, divide the pixel values by (2 ** 16 - 1) and multiply by 30, so the maximum value 2 ** 16 - 1 corresponds to 30 m.

- Optical flow: NumPy image arrays stored as compressed .npz files. They have two channels: the horizontal and vertical pixel displacement from the current frame to the next one.

- Normal: NumPy image arrays stored as compressed .npz files. They have three channels, x, y and z, giving the normal vector of the surface that each pixel falls on.

- Camera trajectory pose: a .txt file with one line per frame, each holding the SE(3) world-to-camera transformation of that frame. Format: timestamp, translation (tx, ty, tz), quaternion (qx, qy, qz, qw).
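The formats listed above can be read with NumPy alone. This is a minimal sketch, not the official Drunkard's Odometry dataloader. It assumes that the depth scale is 30 / (2 ** 16 - 1), that each .npz file holds a single array under an undocumented key (so the first entry is taken), and that each pose line contains eight values separated by whitespace or commas, in the order given above. Decoding the PNG itself is left to an image library; for example, OpenCV's `cv2.imread(path, cv2.IMREAD_ANYDEPTH)` keeps the 16-bit values.

```python
import numpy as np

# Assumption: the full uint16 range maps linearly onto 0-30 m.
DEPTH_SCALE_M = 30.0 / (2 ** 16 - 1)


def depth_png_to_meters(depth_u16: np.ndarray) -> np.ndarray:
    """Convert an already-decoded uint16 depth image to meters."""
    if depth_u16.dtype != np.uint16:
        raise ValueError(f"expected uint16 depth, got {depth_u16.dtype}")
    return depth_u16.astype(np.float64) * DEPTH_SCALE_M


def load_npz_array(path) -> np.ndarray:
    """Load the single array in a compressed .npz file (flow or normal map).

    The key name is not documented, so the first (assumed only) entry is used.
    """
    with np.load(path) as data:
        return data[data.files[0]]


def quaternion_to_rotation(qx, qy, qz, qw) -> np.ndarray:
    """3x3 rotation matrix from a quaternion given in (qx, qy, qz, qw) order."""
    q = np.array([qx, qy, qz, qw], dtype=np.float64)
    x, y, z, w = q / np.linalg.norm(q)  # normalize to guard against rounding
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ])


def parse_pose_line(line: str):
    """Parse 'timestamp tx ty tz qx qy qz qw' into (timestamp, 4x4 world-to-camera)."""
    values = [float(v) for v in line.replace(",", " ").split()]
    if len(values) != 8:
        raise ValueError(f"expected 8 values per line, got {len(values)}")
    t, tx, ty, tz, qx, qy, qz, qw = values
    T_cw = np.eye(4)
    T_cw[:3, :3] = quaternion_to_rotation(qx, qy, qz, qw)
    T_cw[:3, 3] = [tx, ty, tz]
    return t, T_cw


def invert_pose(T: np.ndarray) -> np.ndarray:
    """Invert a rigid SE(3) transform, e.g. world-to-camera -> camera-to-world."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv


def load_trajectory(path):
    """Read all (timestamp, pose) pairs, skipping blank lines and '#' comments."""
    poses = []
    with open(path) as f:
        for line in f:
            line = line.strip()
            if line and not line.startswith("#"):
                poses.append(parse_pose_line(line))
    return poses
```

Because the stored poses are world-to-camera, `invert_pose` yields the camera-to-world transform, whose translation column is the camera position in world coordinates.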

Check the Drunkard's Odometry dataloader for further technical details on working with the data.

The Drunkard's Odometry

[GitHub]

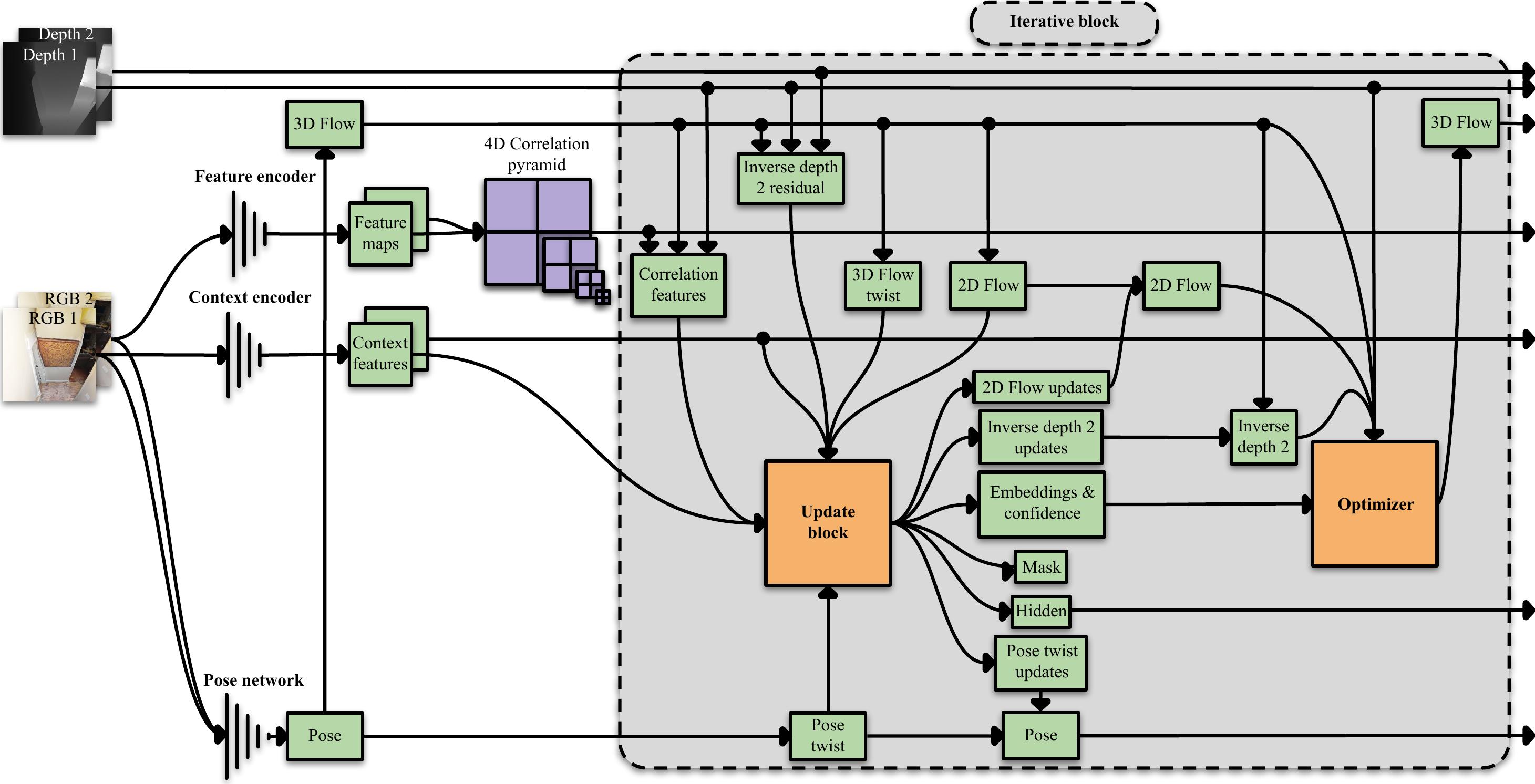

The source code of the Drunkard's Odometry is available on GitHub at the link above, together with detailed instructions for running the training and evaluation scripts.

Paper and BibTeX

The NeurIPS paper is available here.

@inproceedings{recasens2023the,
  title={The Drunkard{\textquoteright}s Odometry: Estimating Camera Motion in Deforming Scenes},
  author={David Recasens and Martin R. Oswald and Marc Pollefeys and Javier Civera},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems Datasets and Benchmarks Track},
  year={2023},
  url={https://openreview.net/forum?id=Kn6VRkYqYk}
}
